An experimental search strategy retrieves more precise results than PubMed and Google for questions about medical interventions

Abstract

Objective. We compared the precision of a search strategy designed specifically to retrieve randomized controlled trials (RCTs) and systematic reviews of RCTs with search strategies designed for broader purposes.

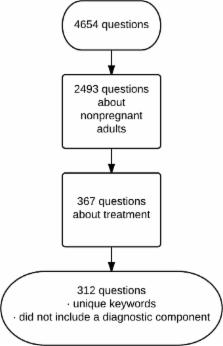

Methods. We designed an experimental search strategy that automatically revised a search up to five times, using increasingly restrictive queries, as long as at least 50 citations were retrieved. We compared the ability of the experimental and alternative strategies to retrieve studies relevant to 312 test questions. The primary outcome, search precision, was defined for each strategy as the proportion of relevant, high-quality citations among the first 50 citations retrieved.
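The revision rule and the precision outcome can be illustrated with a minimal sketch. It assumes the revised queries are supplied in order from least to most restrictive, that `search` is any callable returning a list of citation IDs (a hypothetical stand-in, not the authors' implementation or a PubMed API), and that the result kept is the last search executed. It also assumes precision is computed over the citations actually retrieved when fewer than 50 are returned. The query strings and corpus in the usage part are made up for illustration.

```python
from typing import Callable, Sequence

MIN_RESULTS = 50    # revise only while at least this many citations are retrieved
MAX_REVISIONS = 5   # "up to five times"


def iterative_search(queries: Sequence[str],
                     search: Callable[[str], list[str]]) -> list[str]:
    """Run queries[0]; while the current result set still has at least
    MIN_RESULTS citations, switch to the next, more restrictive query,
    for at most MAX_REVISIONS revisions. Returns the last result set."""
    results = search(queries[0])
    revisions = 0
    while (len(results) >= MIN_RESULTS
           and revisions < MAX_REVISIONS
           and revisions + 1 < len(queries)):
        revisions += 1
        results = search(queries[revisions])
    return results


def precision_at_k(retrieved: Sequence[str], relevant: set[str], k: int = 50) -> float:
    """Share of relevant, high-quality citations among the first k retrieved.
    Assumption: the denominator is the number actually retrieved (at most k)."""
    top = list(retrieved[:k])
    if not top:
        return 0.0
    return sum(1 for c in top if c in relevant) / len(top)


# Hypothetical index: each query maps to the citation IDs it would return.
fake_index = {
    "q0": [f"c{i}" for i in range(400)],
    "q1": [f"c{i}" for i in range(120)],
    "q2": [f"c{i}" for i in range(30)],
    "q3": [f"c{i}" for i in range(5)],
}
hits = iterative_search(["q0", "q1", "q2", "q3"], lambda q: fake_index[q])
print(len(hits))                                        # 30: q1 had >= 50, so q2 was run
print(precision_at_k(hits, {"c1", "c5", "c70"}))        # 2 relevant of 30 retrieved
```

Stopping as soon as a result set drops below the threshold is one reading of "as long as at least 50 citations were retrieved"; an implementation could instead fall back to the previous, larger result set.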

Results. The experimental strategy had the highest median precision (5.5%; interquartile range [IQR]: 0%–12%), followed by the narrow strategy of the PubMed Clinical Queries (4.0%; IQR: 0%–10%). The experimental strategy found the most high-quality citations (median 2; IQR: 0–6) and was the strategy most likely to find at least one high-quality citation (73% of searches; 95% confidence interval [CI]: 68%–78%). All comparisons were statistically significant.
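As a rough consistency check on the reported interval, the sketch below computes a normal-approximation (Wald) 95% CI for a proportion. It assumes the denominator is the 312 test-question searches and that a Wald interval is appropriate. The abstract does not say which interval method the authors used (an exact or Wilson interval would give slightly different bounds), so this is an illustration, not a reproduction of their analysis.

```python
import math


def wald_ci(p_hat: float, n: int, z: float = 1.959964) -> tuple[float, float]:
    """Normal-approximation (Wald) confidence interval for a proportion,
    clipped to [0, 1]. z = 1.959964 gives a two-sided 95% interval."""
    se = math.sqrt(p_hat * (1 - p_hat) / n)
    return max(0.0, p_hat - z * se), min(1.0, p_hat + z * se)


# Assumption: 73% of 312 searches found at least one high-quality citation.
low, high = wald_ci(0.73, 312)
print(f"{low:.0%} to {high:.0%}")   # 68% to 78%
```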

Conclusions. The experimental strategy performed best on all outcomes, although all strategies had low precision.