- Record: found

- Abstract: found

- Article: found

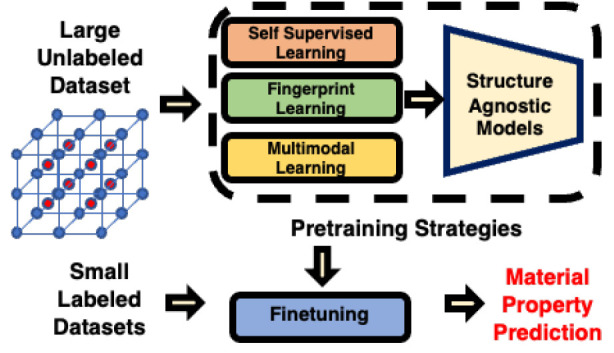

Pretraining Strategies for Structure Agnostic Material Property Prediction


Abstract

In recent years, machine learning (ML) models, especially graph neural networks (GNNs), have been used successfully for fast and accurate prediction of material properties. However, most ML models rely on relaxed crystal structures to construct descriptors for accurate predictions. Generating these relaxed structures can be expensive and time-consuming, which adds a processing step for every model that depends on them. To address this challenge, structure-agnostic methods have been developed that use fixed-length descriptors engineered from human knowledge about the material. However, such descriptors are usually hand-engineered, require extensive domain knowledge, and are generally not used with learnable models, which are known to perform better. Recent work has proposed learnable frameworks that construct representations from stoichiometry alone, combining the flexibility of deep learning with structure-agnostic learning. In this work, we propose three pretraining strategies for these structure-agnostic, learnable frameworks that further improve downstream material property prediction. We use self-supervised learning (SSL), fingerprint learning (FL), and multimodal learning, and demonstrate their efficacy on downstream tasks with Roost, a popular structure-agnostic architecture. Our results show significant improvements on small data sets and improved data efficiency on larger data sets, underscoring the potential of pretraining strategies that effectively leverage unlabeled data for accurate material property prediction.
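The central idea above is that a model such as Roost can learn from stoichiometry alone. The following is a minimal sketch, not the Roost code base. It parses a formula into normalized element fractions and builds a dense graph in which every element is connected to every other element, with the fractions serving as node weights. It also computes a hand-engineered, fixed-length fingerprint of the kind a fingerprint-learning objective could regress during pretraining. The function names, the tiny `ELEMENT_PROPS` table, the self-loop for single-element compositions, and the mean/range descriptor are all illustrative assumptions. The paper's actual featurization and pretraining targets may differ.

```python
import re
from collections import defaultdict

# Element symbol (capital + optional lowercase letter) followed by an optional
# integer or decimal count, e.g. "Fe2", "O3", "Na", "Li0.5".
_TOKEN = re.compile(r"([A-Z][a-z]?)(\d+(?:\.\d+)?)?")

# Hypothetical mini lookup table: (atomic number, Pauling electronegativity).
# A real fingerprint would use a full elemental property table.
ELEMENT_PROPS = {
    "Na": (11, 0.93),
    "Cl": (17, 3.16),
    "Fe": (26, 1.83),
    "O": (8, 3.44),
}


def parse_formula(formula: str) -> dict[str, float]:
    """Return normalized element fractions for a simple formula such as 'Fe2O3'.

    Assumption: parentheses, hydrates, and charges are not supported.
    """
    counts = defaultdict(float)
    pos = 0
    for match in _TOKEN.finditer(formula):
        if match.start() != pos:  # an unparseable character was skipped
            raise ValueError(f"cannot parse {formula!r} at position {pos}")
        element, amount = match.group(1), match.group(2)
        counts[element] += float(amount) if amount else 1.0
        pos = match.end()
    if pos != len(formula) or not counts:
        raise ValueError(f"cannot parse {formula!r}")
    total = sum(counts.values())
    return {el: n / total for el, n in counts.items()}


def build_dense_graph(fractions: dict[str, float]):
    """Hypothetical Roost-style input: one node per element, all pairs connected.

    Returns (elements, weights, self_idx, nbr_idx); edge k passes a message
    from node nbr_idx[k] to node self_idx[k]. A single-element composition
    gets one self-loop so message passing still has an edge (assumption).
    """
    elements = list(fractions)
    weights = [fractions[el] for el in elements]
    n = len(elements)
    if n == 1:
        return elements, weights, [0], [0]
    self_idx, nbr_idx = [], []
    for i in range(n):
        for j in range(n):
            if i != j:  # no self-loops in the multi-element case
                self_idx.append(i)
                nbr_idx.append(j)
    return elements, weights, self_idx, nbr_idx


def composition_fingerprint(fractions: dict[str, float]) -> list[float]:
    """Hypothetical fixed-length descriptor: fraction-weighted mean and range
    of each tabulated property. Raises KeyError for elements not in the table."""
    n_props = len(next(iter(ELEMENT_PROPS.values())))
    fingerprint = []
    for p in range(n_props):
        values = [ELEMENT_PROPS[el][p] for el in fractions]
        mean = sum(frac * ELEMENT_PROPS[el][p] for el, frac in fractions.items())
        fingerprint += [mean, max(values) - min(values)]
    return fingerprint


if __name__ == "__main__":
    fractions = parse_formula("Fe2O3")
    elements, weights, self_idx, nbr_idx = build_dense_graph(fractions)
    print(elements, weights)  # ['Fe', 'O'] [0.4, 0.6]
    print(list(zip(self_idx, nbr_idx)))  # [(0, 1), (1, 0)]
    print(composition_fingerprint(fractions))
```

The graph is fully connected because a composition carries no bonding information. Any interaction between elements must therefore be learned, for example through attention weighted by the element fractions. In a learnable model, the lookup table would be replaced by element embeddings. The fixed-length fingerprint would then serve only as an auxiliary pretraining target.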
