Cognitive penetrability of scene representations based on horizontal image disparities

Abstract

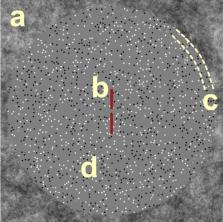

The structure of natural scenes is signaled by many visual cues. Principal amongst them are the binocular disparities created by the laterally separated viewpoints of the two eyes. Disparity cues are believed to be processed hierarchically, first in terms of local measurements of absolute disparity and second in terms of more global measurements of relative disparity that allow extraction of the depth structure of a scene. Psychophysical and oculomotor studies have suggested that relative disparities are particularly relevant to perception, whilst absolute disparities are not. Here, we compare neural responses to stimuli that isolate the absolute disparity cue with stimuli that contain additional relative disparity cues, using the high temporal resolution of EEG to determine the temporal order of absolute and relative disparity processing. By varying the observers’ task, we assess the extent to which each cue is cognitively penetrable. We find that absolute disparity is extracted before relative disparity, and that task effects arise only at or after the extraction of relative disparity. Our results indicate a hierarchy of disparity processing stages leading to the formation of a proto-object representation upon which higher cognitive processes can act.
