Deep Learning based Radiomics (DLR) and its usage in noninvasive IDH1 prediction for low grade glioma

Abstract

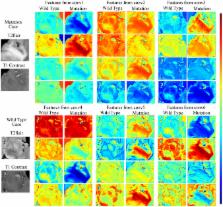

Deep learning-based radiomics (DLR) was developed to extract deep information from multiple modalities of magnetic resonance (MR) images. The performance of DLR for predicting the mutation status of isocitrate dehydrogenase 1 (IDH1) was validated in a dataset of 151 patients with low-grade glioma. A modified convolutional neural network (CNN) structure with 6 convolutional layers and a fully connected layer with 4096 neurons was used to segment tumors. Instead of calculating image features from segmented images, as is typically done in conventional radiomics approaches, image features were obtained by normalizing the information of the last convolutional layers of the CNN. A Fisher vector was used to encode the CNN features from image slices of different sizes. High-throughput features with dimensionality greater than 1.6 × 10^4 were obtained from the CNN. Paired t-tests and F-scores were used to select CNN features that were able to discriminate IDH1 status. On the same dataset, the area under the receiver operating characteristic curve (AUC) of the conventional radiomics method was 86% for IDH1 estimation, whereas for DLR the AUC was 92%. The AUC of IDH1 estimation was further improved to 95% using DLR based on multiple-modality MR images. DLR could be a powerful way to extract deep information from medical images.
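
The feature-selection step mentioned in the abstract can be illustrated with a small sketch. The abstract does not define the F-score it uses, so the code below assumes the common two-class Fisher-type F-score (squared distance of each class mean from the overall mean, divided by the sum of the within-class sample variances). The function names `f_scores` and `select_top_k`, and the toy data, are hypothetical and are not taken from the article.

```python
import numpy as np


def f_scores(X, y):
    """Per-feature F-score for a binary label (assumed definition, see lead-in).

    X: array of shape (n_samples, n_features)
    y: array of shape (n_samples,), truthy for the positive class
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y).astype(bool)
    pos, neg = X[y], X[~y]

    mean_all = X.mean(axis=0)
    # Between-class term: how far each class mean sits from the overall mean.
    numerator = (pos.mean(axis=0) - mean_all) ** 2 + (neg.mean(axis=0) - mean_all) ** 2
    # Within-class term: sum of the two sample variances (ddof=1).
    denominator = pos.var(axis=0, ddof=1) + neg.var(axis=0, ddof=1)
    # Guard against division by zero for constant features.
    return numerator / np.maximum(denominator, 1e-12)


def select_top_k(X, y, k):
    """Indices of the k features with the highest F-score, best first."""
    scores = f_scores(X, y)
    return np.argsort(scores)[::-1][:k]


# Toy data: feature 0 separates the two groups, feature 1 does not.
X_demo = np.array([
    [1.0, 5.0],
    [1.2, 1.0],
    [0.8, 3.0],
    [3.0, 5.0],
    [3.2, 1.0],
    [2.8, 3.0],
])
y_demo = np.array([0, 0, 0, 1, 1, 1])

demo_scores = f_scores(X_demo, y_demo)
demo_top = select_top_k(X_demo, y_demo, k=1)
```

In this toy case feature 0 receives a high score and feature 1 a score of zero, so only feature 0 is kept. In a pipeline like the one described, such a ranking would be applied to the encoded CNN features, possibly alongside a t-test filter; how the two criteria were combined is not stated in the abstract.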
